The Carbon Footprint of Machine Learning

Concerns around the growing carbon footprint of AI as a potential contributor to climate change require action now. Carbon neutral is the new black.

AI and machine learning (ML) news is everywhere we turn today, and these technologies are affecting virtually every industry, including healthcare, finance, retail, agriculture, defense, automotive, and even social media. Many of these solutions are built with neural networks, a form of ML; such networks are called “deep” when they involve multiple layers of abstraction. Deep learning (DL) could be the most important software breakthrough of our time. Until recently, humans programmed all software. Deep learning is a form of artificial intelligence (AI) that uses data to write software, and it typically “learns” from large volumes of reference training data.

Andrew Ng, former Chief Scientist at Baidu and co-founder of Coursera, has called AI the new electricity. Much like the internet, deep learning will have broad and deep ramifications, and it is relevant to every industry, not just the computing industry.

The internet made it possible to search for information, communicate via social media, and shop online. Deep learning enables computers to understand photos, translate language, diagnose diseases, forecast crops, and drive cars. The internet has been disruptive to media, advertising, retail, and enterprise software. Deep learning could change the manufacturing, automotive, health care, and finance industries dramatically.

By “automating” the creation of software, deep learning could turbocharge every industry, and it is already transforming our lives in many ways. Deep learning is creating the next generation of computing platforms, for example:

- Conversational Computers: Powered by AI, smart speakers answered 100 billion voice commands in 2020, 75% more than in 2019.

- Self-Driving Cars: Waymo's autonomous vehicles have collected more than 20 million real-world driving miles across 25 cities, including San Francisco, Detroit, and Phoenix.

- Consumer Apps: We are familiar with recommendation engines that learn from all our digitally recorded behavior and drive our choices of products, services, and entertainment. They determine the personalized ads we are exposed to and are the primary source of revenue for Google, Facebook, and others, often using data that we did not realize was being collected, and without our consent. But they can also build market advantage: TikTok, which uses deep learning for video recommendations, has outgrown Snapchat and Pinterest combined.

According to ARK Invest research, deep learning will add $30 trillion to global equity market capitalization over the next 15 to 20 years. ARK estimates that the ML/DL-driven revolution is as substantial a transformation of the world economy as the shift from IT computing to internet platforms was in the late ’90s, and predicts that deep learning will be the dominant source of market capital creation over the coming decades.

Three factors drive the advance of AI: algorithmic innovation, data, and the amount of computing capacity available for training. Although computing and algorithmic efficiency are improving substantially, data volumes are also increasing dramatically, and recent large language models (LLMs), such as OpenAI’s GPT-3 and Google’s BERT, show that this approach has a significant resource-usage impact.
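To make the compute driver concrete, training compute is often approximated as C ≈ 6 × N × D, where N is the number of model parameters and D is the number of training tokens (a rule of thumb from the scaling-law literature, not something this article derives). The minimal sketch below applies it to GPT-3, assuming the roughly 175 billion parameters and 300 billion training tokens described in the GPT-3 paper. The approximation ignores attention and other overheads, so treat the result as an order-of-magnitude figure.

```python
# Rough training-compute estimate using the common approximation
# C ≈ 6 * N * D: about 2 FLOPs per parameter per token for the forward
# pass and about 4 for the backward pass. Attention and other overheads
# are ignored, so this is an order-of-magnitude estimate only.

def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total floating-point operations for one training run."""
    return 6.0 * num_params * num_tokens


# GPT-3 (assumed figures): ~175 billion parameters, ~300 billion tokens.
gpt3_flops = training_flops(num_params=175e9, num_tokens=300e9)
print(f"GPT-3 training compute: ~{gpt3_flops:.2e} FLOPs")
```

Because compute is a product of model size and data size, scaling both by 10x multiplies compute by roughly 100x, which is why growth in models and datasets together drives resource use up so quickly.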

"If we were able to give the best 20,000 AI researchers in the world the power to build the kind of models you see Google and OpenAI build in language; that would be an absolute crisis, there would be a need for many large power stations."

Andrew Moore, GM Cloud Ops Google Cloud

This energy-intensive workload has grown immensely in recent years, and ML may become a significant contributor to climate change if this exponential trend continues. Thus, while there are many reasons to be optimistic about our technological progress, it is also wise both to consider what can be done to reduce the carbon footprint and to take meaningful action to address this risk.

The Problem: Exploding Energy Use & The Growing Carbon Footprint

Lasse Wolff Anthony, one of the creators of Carbontracker and co-author of a study on AI power usage, believes this drain on resources is something the community should start thinking about now, given that the computing resources used to train the largest AI models rose roughly 300,000-fold between 2012 and 2018.

The study estimated that training OpenAI’s giant GPT-3 text-generation model required roughly 190,000 kWh, which, at the average carbon intensity of the US grid, would have produced 85,000 kg of CO2 equivalent. That is comparable to driving a car to the Moon and back, about 700,000 km or 435,000 miles. Other estimates are even higher.
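The figures above are linked by simple arithmetic: emissions equal the energy consumed multiplied by the carbon intensity of the electricity grid. The sketch below uses only the numbers quoted in this section to back out the grid intensity and the per-kilometer car emissions they imply, then shows how the same energy would translate on a cleaner grid. The 0.05 kg CO2eq/kWh low-carbon value is an illustrative assumption, not a measured figure.

```python
# Figures quoted for GPT-3 training in the Carbontracker study.
ENERGY_KWH = 190_000    # estimated training energy
EMISSIONS_KG = 85_000   # CO2eq at the average US grid intensity
DISTANCE_KM = 700_000   # car-driving equivalent


def co2_kg(energy_kwh: float, intensity_kg_per_kwh: float) -> float:
    """Emissions = energy consumed x carbon intensity of the grid."""
    return energy_kwh * intensity_kg_per_kwh


# Grid carbon intensity implied by the reported numbers (kg CO2eq per kWh).
implied_intensity = EMISSIONS_KG / ENERGY_KWH
# Car emissions implied by the driving comparison (kg CO2eq per km).
implied_car_kg_per_km = EMISSIONS_KG / DISTANCE_KM

print(f"implied grid intensity: {implied_intensity:.3f} kg CO2eq/kWh")
print(f"implied car emissions:  {implied_car_kg_per_km * 1000:.0f} g CO2eq/km")

# Same energy on a hypothetical low-carbon grid (assumed 0.05 kg/kWh).
print(f"low-carbon grid: {co2_kg(ENERGY_KWH, 0.05):,.0f} kg CO2eq")
```

Because energy and grid intensity multiply, reducing either one reduces emissions proportionally, so where a model is trained can matter as much as how long it trains.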

“As datasets grow larger by the day, the problems that algorithms need to solve become more and more complex,” Benjamin Kanding, co-author of the study, added. “Within a few years, there will probably be several models that are many times larger than GPT-3.”

Training GPT-3 reportedly cost $12 million for a single training run. However, that run was only possible after the right configuration had been found. Training the final deep learning model is just one of several steps in the development of GPT-3. Before that, the AI researchers had to gradually increase layers and parameters and tune the language model’s many hyperparameters until they reached the right configuration. That trial and error becomes more and more expensive as the neural network grows. We can’t know the exact cost of the research without more information from OpenAI, but one expert estimated it to be somewhere between 1.5X and 5X the cost of training the final model.

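Applied to a lower, widely cited $4.6 million estimate for the final training run, this would put the total cost of research and development (the final run plus 1.5X to 5X its cost) between $11.5 million and $27.6 million, plus the overhead of parallel GPUs; starting from the $12 million figure, the range would be roughly $30 million to $72 million. This does not even include the cost of human expertise, which is also substantial.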
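The research-and-development range follows from one reading of the expert estimate: experimentation costs 1.5X to 5X the final run, on top of the final run itself. The sketch below encodes that reading (an assumption, since the basis of the estimate is not stated) and applies it both to a lower, widely cited $4.6 million estimate for the final run, which reproduces the $11.5 million to $27.6 million range, and to the $12 million figure quoted above.

```python
def rnd_cost_range(final_run_cost: float,
                   low_multiplier: float = 1.5,
                   high_multiplier: float = 5.0) -> tuple[float, float]:
    """Total R&D cost as the final run plus experimentation costing
    low_multiplier..high_multiplier times that run (an assumed reading)."""
    return (final_run_cost * (1 + low_multiplier),
            final_run_cost * (1 + high_multiplier))


for final_cost in (4_600_000, 12_000_000):
    low, high = rnd_cost_range(final_cost)
    print(f"final run ${final_cost / 1e6:.1f}M -> "
          f"total R&D ${low / 1e6:.1f}M to ${high / 1e6:.1f}M")
```

The multipliers are parameters so that other estimates of the experimentation overhead can be plugged in directly.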

OpenAI has stated that while the training cost is high, running costs will be much lower. However, access is available only through an API, because few organizations could invest in the hardware needed to run the model regularly. Developing GPT-4, which some reports suggested could be 500 or more times larger than GPT-3, is estimated to cost more than $100 million in training alone!

The ACL community has also made some recommendations to help the ML community reduce its carbon footprint, for example, increasing the alignment between experiments and research hypotheses, reducing the focus on leaderboards, and increasing the focus on experimental efficiency.

These costs mean that this kind of initiative can only be attempted by a handful of companies with huge market valuations. They also suggest that today’s AI research field is inherently non-collaborative. The research approach of “obtain the dataset, create a model, beat the current state of the art, rinse, repeat” creates a high barrier to entry for new researchers and for researchers with limited computational resources.

Ironically, a company with the word “open” in the name has now chosen to not release the architecture and the pre-trained model. The company has opted to commercialize the deep learning model instead of making it freely available to the public.

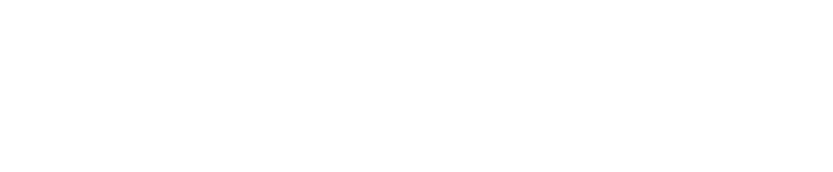

So how is this trend toward large models likely to progress? While advances in hardware and software have been driving down AI training costs by 37% per year, the size of AI models is growing much faster, at 10x per year. As a result, total AI training costs continue to climb. Researchers believe that the cost of training a state-of-the-art AI model is likely to increase 100-fold, from roughly $1 million today to more than $100 million by 2025. The training cost outlook from ARK Invest is shown below on a log scale, where you can also see how the original NMT efforts compare to GPT-3.
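The two yearly rates combine multiplicatively. The minimal sketch below assumes, as a simplification, that training cost scales linearly with model size; under that assumption a 10x yearly growth in size and a 37% yearly drop in unit cost still leave total cost growing about 6.3x per year. It also computes the break-even growth rate at which total cost would stay flat. This illustrates why costs keep climbing; it is not a reproduction of ARK's forecast.

```python
def net_cost_multiplier(size_growth: float, unit_cost_decline: float) -> float:
    """Yearly multiplier on total training cost.

    Assumes (a simplification) that training cost scales linearly with
    model size, so the two yearly effects simply multiply.
    """
    return size_growth * (1.0 - unit_cost_decline)


def break_even_size_growth(unit_cost_decline: float) -> float:
    """Yearly size growth at which total training cost stays flat."""
    return 1.0 / (1.0 - unit_cost_decline)


yearly = net_cost_multiplier(size_growth=10.0, unit_cost_decline=0.37)
print(f"net yearly growth in training cost: {yearly:.1f}x")
print(f"break-even model growth: {break_even_size_growth(0.37):.2f}x per year")
```

Under these assumptions, models would have to grow by less than about 1.6x per year for efficiency gains alone to bring total training costs down.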

Training a powerful machine learning algorithm often means running huge banks of computers for days, if not weeks. The fine-tuning required to perfect an algorithm, for example, by searching through different neural network architectures to find the best one, can be especially computationally intensive. For all the hand-wringing, though, it remains difficult to measure how much energy AI consumes, and even harder to predict how much of a problem it could become.
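One common way to approximate a run's energy, in the spirit of several published estimates, is to multiply the number of accelerators by their average power draw and the training time, then scale by the data center's Power Usage Effectiveness (PUE) to account for cooling and other facility overhead. The sketch below follows that approach. The GPU count, 300 W draw, two-week duration, PUE of 1.5, and 100-trial search are all hypothetical values chosen for illustration, and CPU and memory power are ignored.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical description of one training run."""
    num_gpus: int
    avg_gpu_power_w: float  # average draw per GPU in watts (assumed)
    hours: float            # wall-clock training time
    pue: float = 1.5        # data-center Power Usage Effectiveness (assumed)

    def energy_kwh(self) -> float:
        """GPU energy scaled by PUE to include cooling and facility overhead.
        CPU, memory, and networking power are ignored in this sketch."""
        gpu_energy_kwh = self.num_gpus * self.avg_gpu_power_w * self.hours / 1000.0
        return gpu_energy_kwh * self.pue


def search_energy_kwh(run: TrainingRun, num_trials: int) -> float:
    """Energy for an architecture or hyperparameter search that repeats
    a comparable run num_trials times."""
    return run.energy_kwh() * num_trials


run = TrainingRun(num_gpus=64, avg_gpu_power_w=300.0, hours=14 * 24)
print(f"single run:       {run.energy_kwh():,.0f} kWh")
print(f"100-trial search: {search_energy_kwh(run, 100):,.0f} kWh")
```

In this simple model a search multiplies the energy of one run by the number of trials, which is why the search phase can dwarf the final training run.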

There have been several efforts in 2021 to build models even bigger than GPT-3, all probably with a huge carbon footprint. But there is some good news: Chinese tech giant Alibaba announced M6, a massive model with 10 trillion parameters (roughly 50x the size of GPT-3), and managed to train it with 1% of the energy needed to train GPT-3!

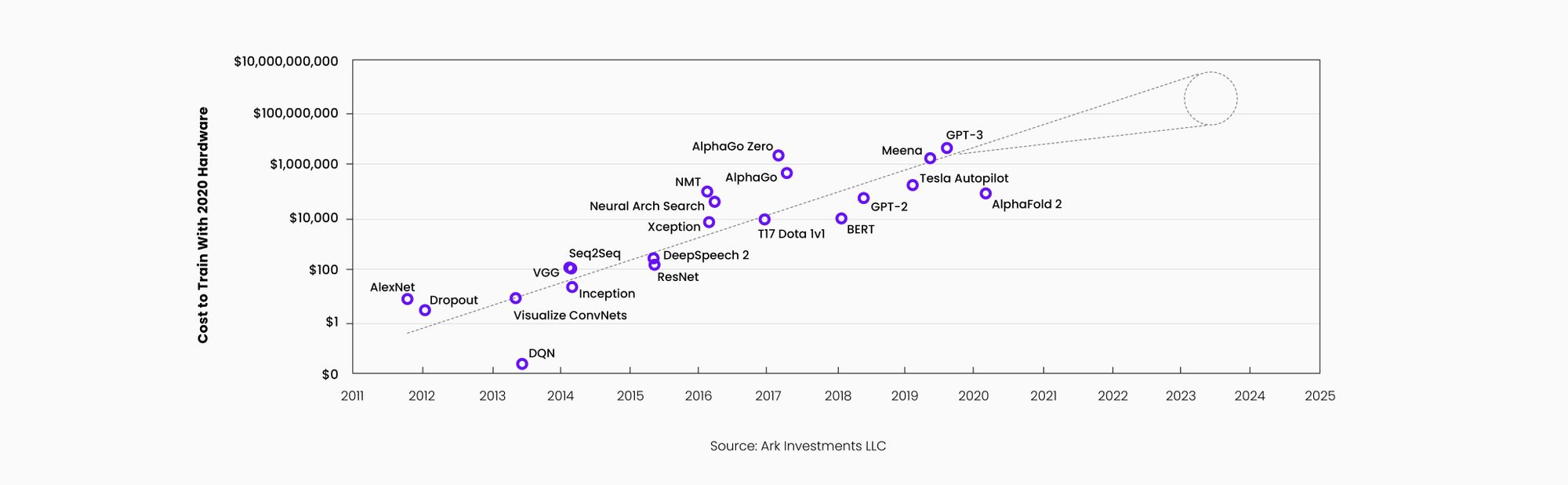

Another graphic, shown below, illustrates the carbon impact of efforts to enhance deep learning models. Work aimed at reducing the error rates of existing models is a routine part of deep learning practice. In neural MT, for example, a generic model is adapted or customized with new data.

Subsequent efforts to improve accuracy and reduce error rates often require additional data, retraining, and processing. The chart below shows how much energy is needed to reduce model error rates for image recognition on the ImageNet benchmark. As it shows, the environmental impact of improving large models is substantial and needs to be considered.

Ongoing research and new startups are focused on more efficient training and improvement techniques, lighter-footprint models that are only slightly less accurate, and more efficient hardware. All of these are needed and will hopefully help reverse, or at least slow, the 300,000x increase in deep learning compute seen between 2012 and 2018.

Here is another site that lets AI researchers roughly calculate the carbon footprint of their algorithms.

And as the damage caused by climate change becomes more apparent, AI experts are increasingly troubled by those energy demands. Many of the deep learning initiatives shown in the first chart above are being conducted in the Silicon Valley area in Northern California. This is an area that has witnessed several catastrophic climate events in the recent past:

- Seven of the ten largest recorded forest wildfires in California have happened in the last three years!

- In October 2021, after a prolonged drought, the Bay Area was hit by a “bomb cyclone” rain event that produced the largest 24-hour rainfall in San Francisco since the Gold Rush!

Growing recognition of these impending problems is pushing big tech companies to implement carbon-neutral strategies. Many consumers now demand that their preferred brands show awareness and move toward carbon neutrality.

Climate Neutral Certification gives businesses and consumers a path to a net-zero future, and it also builds brand loyalty and advocacy. A look at the list of committed public companies shows that certification is now recognized as a brand-enhancing move that builds market momentum.

Uber and Hertz recently announced a dramatic expansion of their electric vehicle fleet and received much positive feedback from customers and the market.

Carbon Neutral Is The New Black.

What Sustainability Means to Translated Srl

In the past few years, Translated’s energy consumption linked to AI tasks has increased exponentially, and it now accounts for two-thirds of the company’s total energy consumption. Training a translation model for a single language can produce as much CO2 as driving a car for thousands of kilometers.

Training a large model can produce as much CO2 as hundreds of airline flights. This is why Translated is pledging to become a completely carbon-neutral company.

"How? Water is among the cleanest energy sources out there, so we have decided to acquire one of the first hydroelectric power plants in Italy. This plant was designed by Albert Einstein’s father in 1895. We are adapting and renovating this historic landmark, and eventually, it will produce over 1 million kWh of energy a year, which will be sufficient to cover the needs of our data center, campus, and beyond."
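A plant's yearly output is an amount of energy (kWh), while its capacity is a power (kW); dividing annual energy by the 8,760 hours in a year gives the average power it delivers. The sketch below applies that conversion to the plant's stated output, read as energy per year, and for scale compares it with the 190,000 kWh GPT-3 training estimate quoted earlier in this article. Treating the output as exactly 1 million kWh per year is an assumption, since the quote says "over".

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def average_power_kw(annual_energy_kwh: float) -> float:
    """Average continuous power (kW) implied by a yearly energy output (kWh)."""
    return annual_energy_kwh / HOURS_PER_YEAR


annual_output_kwh = 1_000_000  # assumed: the quote says "over 1 million" per year
gpt3_training_kwh = 190_000    # Carbontracker estimate for GPT-3, cited earlier

print(f"average output: about {average_power_kw(annual_output_kwh):.0f} kW")
print(f"GPT-3-scale training runs covered per year: "
      f"{annual_output_kwh / gpt3_training_kwh:.1f}")
```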

Additionally, the overall architecture of ModernMT minimizes the need for large, energy-intensive retraining and for maintaining multiple client-specific models, both of which are typical of most MT deployments today.

Global enterprises may have multiple subject domains and varied types of content, so multiple optimizations are needed to ensure that MT performs well. Typical MT solutions require, for each language, separate MT engines for web content, technical product information, customer service and support content, and user-generated and community content.

ModernMT can handle all these adaptation variants with a single engine that can be optimized differently for each.
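The maintenance burden of per-content-type engines grows multiplicatively. Taking the four content types listed above and a hypothetical portfolio of 20 languages, the sketch below counts how many engines would need to be trained and kept up to date under a per-content-type setup versus one adaptive engine per language. The language count and the one-engine-per-language assumption for the adaptive case are illustrative, not ModernMT specifications.

```python
# The four content types listed above; the language count is hypothetical.
CONTENT_TYPES = [
    "web content",
    "technical product information",
    "customer service and support",
    "user-generated and community content",
]


def engines_to_maintain(num_languages: int, per_content_type: bool) -> int:
    """Engines to train and update: one per (language, content type) pair,
    or one per language when a single adaptive engine covers all content."""
    per_language = len(CONTENT_TYPES) if per_content_type else 1
    return num_languages * per_language


languages = 20  # hypothetical portfolio size
print("per content type:      ", engines_to_maintain(languages, per_content_type=True))
print("single adaptive engine:", engines_to_maintain(languages, per_content_type=False))
```

Each engine in the first setup is a separate training and retraining job, so in this simple count the savings scale with the number of content types.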

- ModernMT is a ready-to-run application that does not require any initial training phase. It incorporates user-supplied resources immediately without needing model retraining.

- ModernMT learns continuously and instantly from user feedback and corrections made to MT output as production work is done. It produces output that improves by the day and even the hour in active-use scenarios.

- The ModernMT system manages context automatically and does not require building domain-specific systems.
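The bullets above describe adaptation without retraining. One generic way to get that behavior, shown here as a hypothetical sketch and not as ModernMT's actual algorithm or API, is to keep a memory of approved translations that user corrections update instantly, and to retrieve the most similar stored pairs for each new sentence so the engine can use them as context. The class and function names, the word-overlap (Jaccard) similarity, and the example sentences are all assumptions for illustration; a production system would use far better similarity measures.

```python
import re
from dataclasses import dataclass, field


def tokens(text: str) -> set[str]:
    """Lowercased word tokens, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap similarity between two token sets (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


@dataclass
class AdaptiveMemory:
    """Hypothetical store of (source, target) pairs used as adaptation context."""
    entries: list[tuple[str, str]] = field(default_factory=list)

    def add_correction(self, source: str, corrected_target: str) -> None:
        # A human post-edit becomes usable immediately; no retraining step.
        self.entries.append((source, corrected_target))

    def context_for(self, source: str, k: int = 2) -> list[tuple[str, str]]:
        """Return up to k stored pairs whose source overlaps most with `source`."""
        query = tokens(source)
        scored = [(jaccard(query, tokens(src)), (src, tgt)) for src, tgt in self.entries]
        relevant = [item for item in scored if item[0] > 0.0]
        relevant.sort(key=lambda item: item[0], reverse=True)
        return [pair for _, pair in relevant[:k]]


memory = AdaptiveMemory()
memory.add_correction("Reset the router to factory settings",
                      "Ripristina il router alle impostazioni di fabbrica")
memory.add_correction("Contact customer support", "Contatta l'assistenza clienti")
print(memory.context_for("How do I reset the router?"))
```

The design point being illustrated is that new knowledge lives in data the engine consults at translation time rather than in retrained weights, which in this sketch keeps the cost of each update close to zero.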

ModernMT is perhaps the most flexible and easiest-to-manage enterprise-scale MT system in the industry.

ModernMT’s goal is to deliver the quality of multiple custom engines by adapting to the provided context on the fly. Because only a single engine is needed, it is much easier to manage and update over time, and it requires less training, which in turn reduces its carbon footprint.

As described above, a primary driver of improvement in ModernMT is its tightly integrated human-in-the-loop feedback process, which continuously improves model performance while greatly reducing the need for large-scale retraining.

ModernMT is a relatively low footprint approach to continuously learning NMT that we hope to make even more energy efficient in the future.

ModernMT is a product by Translated.